fastText & faiss

文章目录

主要介绍 fastText、faiss 两个常用的工具,然后顺带介绍一下容易混淆的概念 k-means 和knn。

fastText

fastText结合了自然语言处理和机器学习中最成功的理念。这些包括了使用词袋以及n-gram袋表征语句,还有使用子字(subword)信息,效果上的提升。另外采用了一个softmax层级(利用了类别不均衡分布的优势)来加速运算过程。

fastText 主要是用来解决 word representations和 sentence classification. 有趣的是前者是无监督的学习方式,后者是有监督的学习方式。分别主要来自 ”Enriching Word Vectors with Subword Information“ 和 “Bag of Tricks for Efficient Text Classification” 两篇论文。并且使用的是 shallow neural network 而不是深度网络。

FastText is a library created by the Facebook Research Team for efficient learning of word representations and sentence classification.

Take off: fastText 方法包含三部分:模型架构、层次 Softmax 和 N-gram 特征。 fasttext 有两个用处: text classification 和 word embedding 。 使用场景:大型数据,高效计算

下面进行细说:

模型架构

这个是总的框架图。

分为两个部分介绍这个网络结构:

从input -> hidden:

输入层输入的是一个已经分词后短文本。短文本中每个词的词向量是由该短文本的one-hot矩阵乘以一个初始化的矩阵w得到的。(原理图:下图是fasttext 运行的时候,这个分词是再处理成单词和n-gram 组成的特征,这个是不需要我们进行显性的操作的)

分为两个部分介绍这个网络结构:

从input -> hidden:

输入层输入的是一个已经分词后短文本。短文本中每个词的词向量是由该短文本的one-hot矩阵乘以一个初始化的矩阵w得到的。(原理图:下图是fasttext 运行的时候,这个分词是再处理成单词和n-gram 组成的特征,这个是不需要我们进行显性的操作的)

从 hidden -> output:

从 hidden -> output:

插播一句,我们经常使用的预训练模型中的weights 是从input-> hidden。

插播一句,我们经常使用的预训练模型中的weights 是从input-> hidden。

Hierarchical Softmax

Hierarchical Softmax 不是fasttext 首创,它的改进之处在于实现结构上基于 huffman 树而不是普通的二叉树,属于运算上的优化。具体说来:利用了类别(class)不均衡这个事实(一些类别出现次数比其他的更多),通过使用 Huffman 算法建立用于表征类别的树形结构。对标签进行编码,能够极大地缩小模型预测目标的数量。

这个是softmax 的原始的计算公式: $$ p \left( w _ { j } | w _ { I } \right) = y _ { j } = \frac { \exp \left( u _ { j } \right) } { \sum _ { j ^ { \prime } = 1 } ^ { V } \exp \left( u _ { j ^ { \prime } } \right) } $$

采用二叉树的结构之后,时间上优化不少。$O ( N) \rightarrow O \left( \log _ { 2 } N \right)$。见下图。

和之前的神经网络模型相比,这里的huffmax树的所有内部节点就类似之前神经网络隐藏层的神经元。其中,根节点的词向量对应我们的投影后的词向量,而所有的叶子节点就类似于之前神经网softmax输出层的神经元。叶子节点的个数就是词汇表的大小.

和之前的相比,从隐藏层到输出层的softmax映射不是一下就完成的,而是沿着 huffman树一步步完成的,因此这种 softmax取名为”Hierarchical softmax”.

N-gram 特征

N-gram是基于这样的思想:某个词的出现依赖于其他若干个词;我们获得的信息越多,预测越准确。我想说,我们每个人的大脑中都有一个N-gram模型,而且是在不断完善和训练的。我们的见识与经历,都在丰富着我们的阅历,增强着我们的联想能力。** N-gram 是一种思想,可以有两种level 的实现,一种是基于 character-level,一种是基于 word-level,前者是扩充了对于”不常见“单词,后者是考虑了部分的词的顺序,都是考虑了”周边“ 信息,用流行的话就是 context 的信息。所以比较难界定 fasttext 训练出来的是不是有比较强的词序。**

N-gram模型是一种语言模型(Language Model,LM),语言模型是一个基于概率的判别模型,它的输入是一句话(单词的顺序序列),输出是这句话的概率,即这些单词的联合概率(joint probability)。

这样的作用,使用N-gram来给文本添加额外的特征获得关于局部词顺序的部分信息。 举个栗子:对于句子:“我 喜欢 喝 咖啡”, 如果不考虑顺序,那么就是每个词,“我”,“喜欢”,“喝”,“咖啡”这五个单词的word embedding求平均。如果考虑2-gram, 那么除了以上五个词,还有“我喜欢”,“喜欢喝”,“喝咖啡”等词。“我喜欢”,“喜欢喝”,“喝咖啡”这三个词就作为这个句子的文本特征。 我们经常见到的场景:输入法的预选词汇。就是可以通过这种方式实现的。

当然使用了更多的特征意味着计算量的增加,计算效率下降,于是该作者提出了两种解决方法:

- 过滤掉低词频

- 使用词粒度代替字粒度。

还是使用上面的句子”我喜欢喝咖啡“,如果使用子粒度的2-gram,那么产生的特征是“我喜”,“喜欢”,“欢喝”,“喝咖”,“咖啡”。如果使用词粒度为2-gram,那么产生的特征是“我喜欢”,“喜欢喝”,“喝咖啡”。

补充一句,subwords就是一个词的character-level的n-gram。比如单词”hello”,长度至少为3的char-level的ngram有”hel”,”ell”,”llo”,”hell”,”ello”以及本身”hello”。

Negative Sampling

该 technique 主要是减轻计算量的角度考虑的,每次让一个训练样本仅仅更新一部分的权重参数,这个技术不是 fastText 首创的,但是本着总结知识点的,也就放在这里了。

CBOW / Skip-gram模型 (这个论文中)提出了两种方法,一种是Hierarchical Softmax,另一种是Negative Sampling。论文中提出的两种方法都是用来提高计算效率的,下面说一下负采样。

在训练神经网络时,每当接受一个训练样本,然后调整所有神经单元权重参数,来使神经网络预测更加准确。换句话说,每个训练样本都将会调整所有神经网络中的参数。而 Negative Sampling 每次让一个训练样本仅仅更新一小部分的权重参数,从而降低梯度下降过程中的计算量。如果 vocabulary 大小为1万时, 当输入样本 ( “fox”, “quick”) 到神经网络时, “ fox” 经过 one-hot 编码,在输出层我们期望对应 “quick” 单词的那个神经元结点输出 1,其余 9999 个都应该输出0。在这里,这9999个我们期望输出为0的神经元结点所对应的单词我们称为 negative word,随机选择一小部分的 negative words,比如选 10个 negative words 来更新对应的权重参数。

解决的问题,在最后一层 softmax 的计算量太大,相当于每一次word 都是需要整个dict 量的级别的更新。然后选择 k 个negative words,只是计算这些softmax 的值。

Training a neural network means taking a training example and adjusting all of the neuron weights slightly so that it predicts that training sample more accurately. In other words, each training sample will tweak all of the weights in the neural network. As we discussed above, the size of our word vocabulary means that our skip-gram neural network has a tremendous number of weights, all of which would be updated slightly by every one of our billions of training samples! Negative sampling addresses this by having each training sample only modify a small percentage of the weights, rather than all of them. Here’s how it works. When training the network on the word pair (“fox”, “quick”), recall that the “label” or “correct output” of the network is a one-hot vector. That is, for the output neuron corresponding to “quick” to output a 1, and for all of the other thousands of output neurons to output a 0. With negative sampling, we are instead going to randomly select just a small number of “negative” words (let’s say 5) to update the weights for. (In this context, a “negative” word is one for which we want the network to output a 0 for). We will also still update the weights for our “positive” word (which is the word “quick” in our current example). The paper says that selecting 5-20 words works well for smaller datasets, and you can get away with only 2-5 words for large datasets. Recall that the output layer of our model has a weight matrix that’s 300 x 10,000. So we will just be updating the weights for our positive word (“quick”), plus the weights for 5 other words that we want to output 0. That’s a total of 6 output neurons, and 1,800 weight values total. That’s only 0.06% of the 3M weights in the output layer! In the hidden layer, only the weights for the input word are updated (this is true whether you’re using Negative Sampling or not).

对应的参数

|

|

其中 wordNgrams 是对应语序,字符minn 和maxn 是解决oov 问题。

- 只用unigram的话会丢掉word order信息,所以通过加入N-gram features进行补充

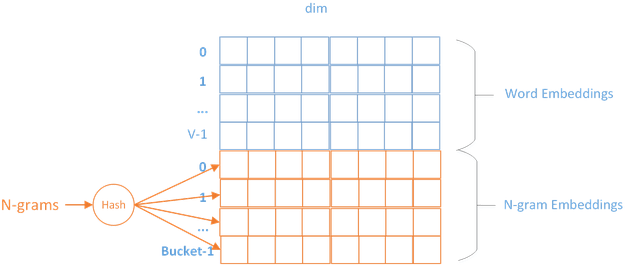

- 用hashing来减少N-gram的存储 由于n-gram的量远比word大的多,完全存下所有的n-gram也不现实。Fasttext采用了Hash桶的方式,把所有的n-gram都哈希到buckets个桶中,哈希到同一个桶的所有n-gram共享一个embedding vector。如下图所示:

Positive samples and Negative samples

One little detail that’s missing from the description above is how do we select the negative samples. (下面说的是如何进行选择negative sample的问题:基本思路是根据出现频率进行选择) The negative samples are chosen using the unigram distribution. Essentially, the probability of selecting a word as a negative sample is related to its frequency, with more frequent words being more likely to be selected as negative samples. Instead of using the raw frequency in the original word2vec paper, each word is given a weight that’s equal to it’s frequency (word count) raised to the 3/4 power. The probability for selecting a word is just it’s weight divided by the sum of weights for all words. $$P \left( w _ { i } \right) = \frac { f \left( w _ { i } \right) ^ { 3 / 4 } } { \sum _ { i = 0 } ^ { n } \left( f \left( w _ { j } \right) ^ { 3 / 4 } \right) }$$

上述中的函数是幂函数,图像的形状和log 函数差不多,都是从 $y =x$ 进行了一下约束,函数变得更加的平缓。对于高频词进行了约束,对于低频次也有机会出现。

This decision to raise the frequency to the 3/4 power appears to be empirical; as the author claims it outperformed other functions (e.g. just using unigram distribution). Side note: The way this selection is implemented in the original word2vec C code is interesting. They have a large array with 100M elements (which they refer to as the unigram table). They fill this table with the index of each word in the vocabulary multiple times, and the number of times a word’s index appears in the table is given by

Then, to actually select a negative sample, we just generate a random integer between 0 and 100M, and use the word at that index in the table. Since the higher probability words occur more times in the table, we’re more likely to pick those.

这个也是有讲 任何进行negative sample的选择 http://jalammar.github.io/illustrated-word2vec/ 一般来说在 word2vec 中context 是会选择到 5,然后这个 positive / negative sample 会是(1/6), 然后 nagative sample 是随机在 dictionary里面选的(所以有可能选到 positive sample), 这个是这个dictionary 是根据频率,出现次数越多的,被选中的可能性也越大。 The number of negative samples is another factor of the training process. The original paper prescribes 5-20 as being a good number of negative samples. It also states that 2-5 seems to be enough when you have a large enough dataset. The Gensim default is 5 negative samples.

To address this, we need to introduce negative samples to our dataset – samples of words that are not neighbors. Our model needs to return 0 for those samples. Now that’s a challenge that the model has to work hard to solve – but still at blazing fast speed. This idea is inspired by Noise-contrastive estimation. We are contrasting the actual signal (positive examples of neighboring words) with noise (randomly selected words that are not neighbors). This leads to a great tradeoff of computational and statistical efficiency.

Essentially, the probability for selecting a word as a negative sample is related to its frequency, with more frequent words being more likely to be selected as negative samples.

They fill this table with the index of each word in the vocabulary multiple times, and the number of times a word’s index appears in the table is given by $P(wi)*P(wi)$ table_size. Then, to actually select a negative sample, you just generate a random integer between 0 and 100M, and use the word at that index in the table. Since the higher probability words occur more times in the table, you’re more likely to pick those.

(ps 这种数量比不是 1:1,常常是 positive : negative =1:5, 这个是经验值,在传统机器学习中可能认为是 data unbalanced) It’s now time to build out our skip-gram generator which will give us pair of words and their relevance

- (word, word in the same window), with label 1 (positive samples).

- (word, random word from the vocabulary), with label 0 (negative samples).

使用

第一个应用场景:词向量。 fastText作为训练词向量认为可以有两种模式,一种是根据周围词语预测中心词汇的CBOW (continuous bag-of-words)模型,另一种是根据中心词汇预测上下文的 skip-gram 模型。

./fasttext – It is used to invoke the FastText library. skipgram/cbow – It is where you specify whether skipgram or cbow is to be used to create the word representations. -input – This is the name of the parameter which specifies the following word to be used as the name of the file used for training. This argument should be used as is. data.txt – a sample text file over which we wish to train the skipgram or cbow model. Change this name to the name of the text file you have. -output – This is the name of the parameter which specifies the following word to be used as the name of the model being created. This argument is to be used as is. model – This is the name of the model created. Running the above command will create two files named model.bin and model.vec. model.bin contains the model parameters, dictionary and the hyperparameters and can be used to compute word vectors. model.vec is a text file that contains the word vectors for one word per line.

最后生成有两个文件,一个 xxx.bin 文件,一个是 xxx.vec 文件,前者是预训练模型,后者是词向量。 这两个可能是最重要的格式了。

The most important parameters of the model are its dimension and the range of size for the subwords.

常见的代码格式:

./fasttext skipgram -input data/fil9 -output result/fil9 -minn 2 -maxn 5 -dim 300

跑偏一下说一下shell的小技巧。 使用echo 或者 < 这样进行单个词或者多个词的词向量的查询。

./fasttext print-word-vectors model.bin < queries.txt echo “word” | ./fasttext print-word-vectors model.bin

Finding simialr words:

./fasttext nn model.bin

最重要的几个参数:

The most important parameters of the model are its dimension and the range of size for the subwords. The dimension (dim) controls the size of the vectors, the larger they are the more information they can capture but requires more data to be learned. But, if they are too large, they are harder and slower to train. By default, we use 100 dimensions, but any value in the 100-300 range is as popular. The subwords are all the substrings contained in a word between the minimum size (minn) and the maximal size (maxn). By default, we take all the subword between 3 and 6 characters, but other range could be more appropriate to different languages:

|

|

The following arguments for the dictionary are optional:

-minCount 词出现的最少次数 [5] -minCountLabel 标签出现的最少次数 [0] -wordNgrams 单词 ngram 的最大长度 [1] -bucket 桶的个数 [2000000] -minn char ngram 的最小长度 [3] -maxn char ngram 的最大长度 [6]

The following arguments for training are optional

-dim 字向量的大小 [100] -ws 上下文窗口的大小 [5] -epoch 迭代次数 [5] -neg 负样本个数 [5] -loss 损失函数 {ns, hs, softmax} [ns]

第二个应用场景:文本分类。

Sentiment analysis and email classification are classic examples of text classification

(BERT 也是采用的这种label 的格式) 在训练数据集中label 默认是使用 “_label_” 进行表示的,当然也是可以进行自定义的。

./fasttext supervised -input train.ft.txt -output model_kaggle -label __label__ -lr 0.5

就是进行predict的时候,有时候并不是很能想起来只是predict top 3 这样的东西。

# Predicting on the test dataset

./fasttext predict model_kaggle.bin test.ft.txt

# Predicting the top 3 labels

./fasttext predict model_kaggle.bin test.ft.txt 3

fasttext VS. CBOW

在标准的多核CPU上, 能够训练10亿词级别语料库的词向量在10分钟之内,能够分类有着30万多类别的50多万句子在1分钟之内。

n-gram

n-gram 是一种基于语言模型的算法,基本思想是将文本内容按照字节顺序进行大小为N的滑动窗口操作,最终形成长度为N的字节片段序列。

CBOW 是和词序无关的,实现 n-gram 作为额外的特征可以捕捉一些部分的词序。fastText是一种基于skip-gram模型的新扩展,它会使用subword的信息,将每个词被表示成一个字符级n-gram词袋(a bag of character n-grams)。每个向量表示与每个字符级n-gram相关联,而词(word)则可以看成是这些n-gram向量表示的求和(sum)。fastText在大语料上训练很快。

** 网络结构方面**

- 输入层:CBOW 的输入层是由目标词汇 $y$ 的上下文单词 ${ x _ { 1 } , \ldots , x _ { c } }$ 组成, $\boldsymbol { x } _ { i }$ 是被 onehot 编码过的 V 维向量,其中 V 是词汇量。而fasttext 的输入是多个单词及其n-gram特征。比如说,对于单词“apple”,假设n的取值为3,则它的trigram有:

“<ap”, “app”, “ppl”, “ple”, “le>” 其中,<表示前缀,>表示后缀。于是,我们可以用这些trigram来表示“apple”这个单词,进一步,我们可以用这5个trigram的向量叠加来表示“apple”的词向量。 这样做有两点好处:

- 对于低频词生成的词向量效果会更好。因为它们的n-gram可以和其它词共享。

- 对于训练词库之外的单词,仍然可以构建它们的词向量。我们可以叠加它们的字符级n-gram向量。

- 从输入层到隐藏层,CBOW会将上下文单词向量叠加起来并经过一次矩阵乘法(线性变化)并应用激活函数,而fastText省略了这一过程,直接将embedding过的向量特征求和取平均;

- 两者都是使用的层次softmax,word2vec 最后的叶子节点是词典中的单词;而fasttext 针对多类有监督训练,将最后的叶子节点改为标签,并且基于哈夫曼树,类别多的标签的路径比较短。

使用 fasttext 进行文本分类的时候,其核心思想是 将整篇文档的词及n-gram向量叠加平均得到文档向量,然后使用文档向量做softmax多分类。

** 层次softmax**

softmax 是在 逻辑回归 (logistic regression) 在多分类任务上的推广,是网络中的最后一层。当 词汇数量V 较大时候,softmax 的计算代价是很大的, O(v) 量级。层次softmax 是将全局多分类转化成了若干个二分类问题,从而将时间复杂度从O(V) 转化成了 O(log V)。

缺点:fastText适用与分类类别非常大而且数据集足够多的情况,当分类类别比较小或者数据集比较少的话,很容易过拟合。

faiss

用途:相似度检测和稠密向量的聚类。

Faiss is a library for efficient similarity search and clustering of dense vectors.

之前的实习经历主要是用faiss 处理文本的representation,但是这个是有偏差的,凡是能够打成词向量,都是可以使用faiss 进行计算的,当然这词向量需要满足:相近内容在相近的空间。

Once the vectors are extracted by learning machinery (from images, videos, text documents, and elsewhere), they’re ready to feed into the similarity search library.

faiss的实现过程

首先使用 index对于向量进行预处理,然后选择不同的模式. 主要讲的是三种模式,一个维度是简单模式,适合在小数据上进行计算 欧氏距离;一个维度是加快检索速度,这种模式下是需要提前的train,其基本的思路对向量进行聚类,当然文中说的是 “细胞”,建立倒排索引,然后检索的时候,搜索这个“细胞”内 和周围的“细胞” 的id 的集合,就可以返回前 K 个最相近的结果;最后一个维度是减少内存的使用,上面两种都是使用的完整的向量,这个模式下是使用的压缩向量,可以使用PCA 进行实现,当然这个模式下得到的结果也是近似解。还有两种计算的上的优化,对于向量进行分段计算,这种可以实现并行,并且支持任务在GPU 上进行运算。

牺牲了一些精确性来使得运行速度更快。

Similarity search can be made orders of magnitude faster if we’re willing to trade some accuracy; that is, deviate a bit from the reference result. For example, it may not matter much if the first and second results of an image similarity search are swapped, since they’re probably both correct results for a given query. Accelerating the search involves some pre-processing of the data set, an operation that we call indexing.

( 下面这句话的观点是什么,感觉不知道逻辑在哪里啊) 向量的比较有两种metric:一种是L2 一种是基于consine (点乘)进行检索。前者是求解最小的值,后者是通过inner——product 求解maximum. 并且是支持GPU的,在原来CPU上建立的index,然后很好的迁移到 GPU上。

faiss 中的三种基本索引

- IndexFlatL2

基于brute-force计算向量的L2距离,就是暴搜。检索速度慢,适用于小数据量。 在计算上进行了优化,比如使用堆存储结构,寻找最接近的 K 个元素时候后,进行分段计算,把 d 维向量分成几段分别进行计算;建立倒排索引( id -contents) ,先使用聚类,然后再类内和相近的类进行寻找而非整个空间。

|

|

- IndexIVFFlat (加速搜索)

对于暴搜来说,海量数据搜索速度太慢,那么需要预训练把向量都聚类。这里使用IndexIVFFlat来加快搜索速度。IndexIVFFlat是faiss的倒排索引,把数据构成的向量空间切割为Voronoi细胞,每个向量落入其中一个Voronoi细胞中。在搜索时,只有查询x所在细胞中包含的数据库向量y与少数几个相邻查询向量进行比较。

训练的时候还需要有一个量化器,用于决定以什么方式将向量分配给Voronoi细胞。每个细胞由一个质心定义,找到一个向量所在的Voronoi细胞包括在质心集中找到该向量的最近邻居。

搜索方法有两个参数:

- nlist 划分Voronoi细胞的数量

- nprobe 执行搜索访问的单元格数(不包括nlist),该参数调整结果速度和准确度之间折中的一种方式。如果设置nprobe=nlist则结果与暴搜一致。

加快索引的方式之一,与暴搜对比就是需要train,把向量空间下的数据切割为Voronoi细胞,检索只对向量所在细胞和周围细胞进行检索。

|

|

- IndexIVFPQ (减少内存使用)

上面两种索引都是存储的完整向量,下面介绍一种压缩向量的方法。IndexIVFPQ基于PQ (Product Quantizer)算法压缩向量。在这种情况下,由于向量没有精确存储,搜索方法返回的距离也是近似值。上面我们看到的索引IndexFlatL2和IndexIVFFlat都会全量存储所有的向量在内存中,为满足大的数据量的需求,faiss提供一种基于Product Quantizer(乘积量化)的压缩算法编码向量大小到指定的字节数。此时,存储的向量时压缩过的,查询的距离也是近似的。

原理:简单来说就是通过PCA将高纬空间转换成低维空间。 原来的数据 train 得到一个转换矩阵P,然后这个矩阵和原来的数据X得到新的降维之后的Y ($PX =Y$)。这样转换过程中信息损失的更少,在faiss 中使用 train() 函数进行实现。

|

|

在涉及index使用考虑速度,acc和内存大小三个不同的维度。然后不同的index 是有不同的侧重的。

** Take Off** (这个是有三方面需要权衡的: query time、 query accuracy and preprocessing time)

As with anything, there is a tradeoff between improving query time versus query accuracy versus preprocessing/index build time versus data storage:

no build time, high query time, high storage, exact accuracy: Faiss IndexFlat

low build time, med query time, high storage, high accuracy: Faiss IndexIVFFlat

med build time, low query time, low-med storage, med-high accuracy: Faiss IndexIVFPQ

very high build time, low query time, low-high storage (whether stored as a k-NN graph or raw data), high accuracy: NN-Descent by Dong et al. (e.g., nmslib)

IndexIVFPQ with perhaps IMI is typically what we concentrate on, seems to be a reasonable sweet spot for billion-scale datasets.

product quantization 算法

这里的乘积是指笛卡尔积(Cartesian product),意思是指把原来的向量空间分解为若干个低维向量空间的笛卡尔积,并对分解得到的低维向量空间分别做量化(quantization)。这样每个向量就能由多个低维空间的量化code组合表示。

The idea is to decomposes the space into a Cartesian product of low dimensional subspaces and to quantize each subspace separately. A vector is represented by a short code composed of its subspace quantization indices.

** Image Vector Dataset**: 存储的是离 embedding 最近的centroid (质心) 的编号 而非向量本身。

Let’s say you have a collection of 50,000 images, and you’ve already performed some feature extraction with a convolutional neural network, and now you have a dataset of 50,000 feature vectors with 1,024 components each.

The first thing we’re going to do is compress our dataset. The number of vectors will stay the same, but we’ll reduce the amount of storage required for each vector. Note that what we’re going to do is not the same as “dimensionality reduction”! This is because the values in the compressed vectors are actually symbolic rather than numeric, so we can’t compare the compressed vectors to one another directly.

Two important benefits to compressing the dataset are that (1) memory access times are generally the limiting factor on processing speed, and (2) sheer memory capacity can be a problem for big datasets.

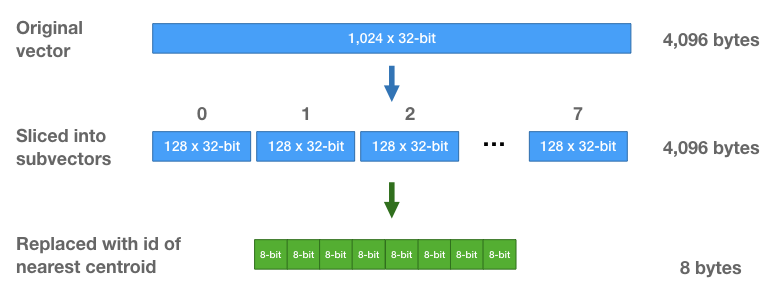

Here’s how the compression works. For our example we’re going to chop up the vectors into 8 sub-vectors, each of length 128 (8 sub vectors x 128 components = 1,024 components). This divides our dataset into 8 matrices that are [50K x 128] each.

These centroids are like “prototypes”. They represent the most commonly occurring patterns in the dataset sub-vectors.

We’re going to use these centroids to compress our 1 million vector dataset. Effectively, we’re going to replace each subregion of a vector with the closest matching centroid, giving us a vector that’s different from the original, but hopefully still close.

Doing this allows us to store the vectors much more efficiently—instead of storing the original floating point values, we’re just going to store cluster ids. For each subvector, we find the closest centroid, and store the id of that centroid.

Each vector is going to be replaced by a sequence of 8 centroid ids. I think you can guess how we pick the centroid ids–you take each subvector, find the closest centroid, and replace it with that centroid’s id.

Note that we learn a different set of centroids for each subsection. And when we replace a subvector with the id of the closest centroid, we are only comparing against the 256 centroids for that subsection of the vector.

Because there are only 256 centroids, we only need 8-bits to store a centroid id. Each vector, which initially was a vector of 1,024 32-bit floats (4,096 bytes) is now a sequence of eight 8-bit integers (8 bytes total per vector!).

总的来说faiss 高效实现了PCA 算法, k-means 算法 和PQ 算法。

文章作者 jijeng

上次更新 2019-03-25