GNN


Graph neural networks (GNNs) are deep learning architectures for graph-structured data. (Code practice to be added.)

Background: why GNNs emerged

There is an increasing number of applications where data are generated from non-Euclidean domains and are represented as graphs with complex relationships and interdependency between objects.

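- Applications built on graph structure: for example, social networks, or the items and users in a recommender system

- Distances between the objects in such graphs are not Euclidean distances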

The success of deep learning in many domains is partially attributed to the rapidly developing computational resources (e.g., GPU), the availability of big training data, and the effectiveness of deep learning to extract latent representations from Euclidean data (e.g., images, text, and videos). These are the reasons machine learning and deep learning have succeeded so far.

Furthermore, a core assumption of existing machine learning algorithms is that instances are independent of each other. Many problems, such as social networks, violate this assumption because their samples are related to one another.

The goal of GNNs:

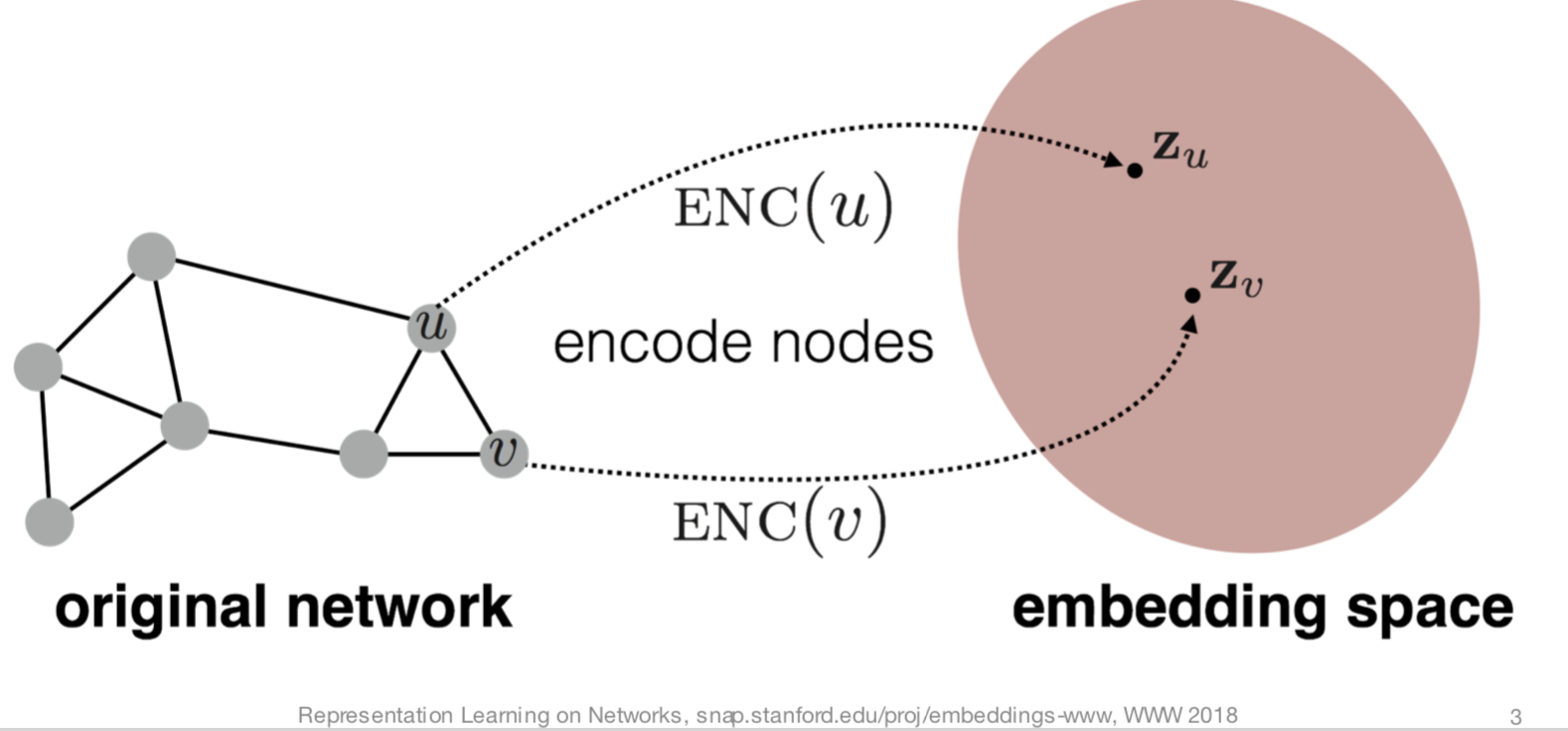

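The goal is to encode nodes so that similarity in the embedding space (e.g., dot product) approximates similarity in the original network. In other words, the similarity computed from the embeddings should stay close to the proximity of the nodes in the original graph.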
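To make this goal concrete, here is a minimal sketch. The four-node path graph `a-b-c-d` and the 2-D embedding values are made up for illustration, and `similarity_gap` is a hypothetical helper, not part of any library. It checks whether every pair of linked nodes has a higher dot product than every pair of unlinked nodes.

```python
from itertools import combinations

# Hypothetical toy graph: a path a - b - c - d.
edges = {("a", "b"), ("b", "c"), ("c", "d")}

# Hand-picked 2-D embeddings (assumed values, not learned by any model).
embeddings = {
    "a": [1.0, 0.0],
    "b": [0.8, 0.6],
    "c": [0.0, 1.0],
    "d": [-0.6, 0.8],
}


def dot(u, v):
    """Dot product, used here as the similarity in embedding space."""
    return sum(x * y for x, y in zip(u, v))


def similarity_gap(embeddings, edges):
    """Return (lowest score among linked pairs, highest score among unlinked pairs)."""
    linked, unlinked = [], []
    for u, v in combinations(sorted(embeddings), 2):
        score = dot(embeddings[u], embeddings[v])
        if (u, v) in edges or (v, u) in edges:
            linked.append(score)
        else:
            unlinked.append(score)
    return min(linked), max(unlinked)


weakest_edge, strongest_non_edge = similarity_gap(embeddings, edges)
# A good embedding keeps linked pairs more similar than unlinked pairs.
print(weakest_edge > strongest_non_edge)
```

In a real setting the embeddings would be learned so that this gap is as large as possible, rather than chosen by hand.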

Two basic algorithms

DeepWalk

DeepWalk is the first algorithm to propose learning node embeddings in an unsupervised manner. Its training process closely resembles that of word embeddings. The motivation is that the frequency of nodes in a graph and the frequency of words in a corpus both follow a power law, as shown in the following figure. This is why DeepWalk connects random walks with NLP: the node sequences produced by walks are distributed much like sentences in a corpus.

In a graph, the frequency with which nodes appear in random walks follows a power law when the graph is very sparse, which resembles word frequency in language models.

(1) Algorithm steps

There are two main steps:

- Perform random walks on nodes in a graph to generate node sequences

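- Run skip-gram to learn the embedding of each node based on the node sequences generated in step 1. Concretely, pick a random start node and a fixed walk length to generate a sequence; nodes that are close together on a walk tend to share features, so the embeddings are trained in the skip-gram fashion.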
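The two steps above can be sketched as follows. This is a minimal illustration using only the standard library, not the reference DeepWalk implementation: the adjacency-list format and the names `random_walk`, `generate_walks`, and `skipgram_pairs` are assumptions made for this example. It covers walk generation and the construction of (center, context) training pairs; the pairs would then be fed to any skip-gram trainer, which is not shown here.

```python
import random


def random_walk(adj, start, walk_length, rng):
    """Uniform random walk of at most `walk_length` nodes starting at `start`."""
    walk = [start]
    while len(walk) < walk_length:
        neighbors = adj[walk[-1]]
        if not neighbors:  # dead end: stop early
            break
        walk.append(rng.choice(neighbors))
    return walk


def generate_walks(adj, walks_per_node, walk_length, seed=0):
    """Step 1: start `walks_per_node` walks from every node, in shuffled order."""
    rng = random.Random(seed)
    nodes = list(adj)
    walks = []
    for _ in range(walks_per_node):
        rng.shuffle(nodes)
        for node in nodes:
            walks.append(random_walk(adj, node, walk_length, rng))
    return walks


def skipgram_pairs(walk, window):
    """Step 2 input: (center, context) pairs within `window` positions of each other."""
    pairs = []
    for i, center in enumerate(walk):
        lo = max(0, i - window)
        hi = min(len(walk), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, walk[j]))
    return pairs


# Hypothetical toy graph as an adjacency list.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
walks = generate_walks(adj, walks_per_node=2, walk_length=5)
pairs = skipgram_pairs(walks[0], window=2)
print(walks[0], len(pairs))
```

Each walk plays the role of a sentence and each node the role of a word, which is why an off-the-shelf word embedding trainer can consume the walks directly.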

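(2) Random-walk sequences have the following properties:

- They capture local structure in the graph

- They are easy to parallelize: walks can be started from N nodes at the same time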
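The parallelism claim follows from the fact that each walk only reads the graph and never writes to shared state. Below is a minimal sketch of that independence, assuming an adjacency-list graph; `walk_from` and `parallel_walks` are hypothetical names. Threads are used only to keep the example short. In CPython they will not speed up this CPU-bound loop, so a real implementation would more likely use processes or a vectorized library.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def walk_from(adj, start, walk_length, seed):
    """One random walk with its own seeded generator, so walks share no state."""
    rng = random.Random(seed)
    walk = [start]
    for _ in range(walk_length - 1):
        neighbors = adj[walk[-1]]
        if not neighbors:  # dead end: stop early
            break
        walk.append(rng.choice(neighbors))
    return walk


def parallel_walks(adj, walk_length, max_workers=4, base_seed=0):
    """Start one walk per node concurrently; results come back in node order."""
    nodes = list(adj)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(walk_from, adj, node, walk_length, base_seed + i)
            for i, node in enumerate(nodes)
        ]
        return [future.result() for future in futures]


# Hypothetical toy graph as an adjacency list.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
for walk in parallel_walks(graph, walk_length=5):
    print(walk)
```

Giving each walk its own seed makes the output reproducible regardless of how the workers are scheduled.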

(3) Drawbacks

However, the main issue with DeepWalk is that it lacks the ability to generalize. Whenever a new node arrives, the model has to be re-trained in order to represent it (the method is transductive). Thus, this approach is not suitable for dynamic graphs whose nodes are constantly changing. In other words, when the graph changes, the embeddings have to be recomputed for the whole graph.
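One way to picture the transductive limitation: after training, the model is essentially a lookup table with one row per node seen during training. The sketch below uses made-up node ids and vectors, and `lookup` is a hypothetical helper; it only shows that a node added after training has no row to look up.

```python
# Hypothetical result of training: one embedding row per node seen in the walks.
trained_embeddings = {
    "u1": [0.2, 0.9],
    "u2": [0.7, 0.1],
}


def lookup(node_id):
    """Return the trained vector, or None if the node was not in the training graph."""
    return trained_embeddings.get(node_id)


print(lookup("u1"))  # a node seen during training has a vector
print(lookup("u3"))  # a node added later has none until the model is re-trained
```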

GraphSAGE

(To be added)

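Application scenarios

As the examples below show, GNN applications tend to focus on relationships within the data, whether that is scene graph generation in CV or sentence-level relation extraction in NLP. This post is mainly interested in applications to text classification and NLP more broadly.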

(1) CV

Computer vision. Applications of GNNs in computer vision include scene graph generation, point cloud classification, and action recognition.

(2) NLP

Natural language processing. A common application of GNNs in natural language processing is text classification. GNNs utilize the inter-relations of documents or words to infer document labels. Generating a semantic or knowledge graph given a sentence is very useful in knowledge discovery.

(3) Recommender systems

Graph-based recommender systems take items and users as nodes. By leveraging the relations between items and items, users and users, users and items, as well as content information, graph-based recommender systems are able to produce high-quality recommendations. The key to a recommender system is to score the importance of an item to a user. As a result, it can be cast as a link prediction problem.
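Casting recommendation as link prediction can be sketched as scoring each candidate user-item edge and ranking items by that score. The embeddings below are made-up values, and the sigmoid of a dot product is just one common choice of scoring function assumed for this example; `link_score` and `recommend` are hypothetical names.

```python
import math

# Hypothetical embeddings; in practice these would come from a trained graph model.
user_emb = {"alice": [0.9, 0.1], "bob": [0.1, 0.8]}
item_emb = {"laptop": [1.0, 0.0], "novel": [0.0, 1.0], "tablet": [0.7, 0.3]}


def link_score(u, v):
    """Score a candidate user-item edge as sigmoid(dot(u, v)), a value in (0, 1)."""
    z = sum(a * b for a, b in zip(u, v))
    return 1.0 / (1.0 + math.exp(-z))


def recommend(user, k=2):
    """Rank all items by predicted link score for `user` and return the top k."""
    scores = {item: link_score(user_emb[user], vec) for item, vec in item_emb.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(recommend("alice"))
print(recommend("bob"))
```

A production system would also exclude items the user has already interacted with and would learn the embeddings from the observed user-item edges.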

(4) Open-source frameworks

- Notably, Fey et al. [92] published a geometric learning library in PyTorch named PyTorch Geometric, which implements many GNNs.

- Most recently, the Deep Graph Library (DGL) [133] was released, which provides a fast implementation of many GNNs on top of popular deep learning platforms such as PyTorch and MXNet.

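References

- 从图(Graph)到图卷积(Graph Convolution):漫谈图神经网络模型 (From Graph to Graph Convolution: An Informal Discussion of Graph Neural Network Models)

- Hands-on Graph Neural Networks with PyTorch & PyTorch Geometric

- nrltutorial

- A Comprehensive Survey on Graph Neural Networks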

Author: jijeng

Last updated: 2020-01-02