首先介绍了信息检索中两种匹配方式:精确匹配和最好匹配,然后介绍在实现过程中涉及到的boolean retrieval 和倒排索引,实现了基于set 的搜索引擎和boolean retrieval的算法。

精确匹配(exact match)

精确匹配(exact match)的特点如下:

- query specifies precise retrieval criteria

- every document either matches or fails to match query

- result is a set of documents (Unordered in pure exact match)

(1)优点

- Can be very efficiently implemented

- Predictable, easy to explain

(2)缺点

- Difficulty increases with collection size

- Indexing vocabulary same as query vocabulary

- Acceptable precision generally means unacceptable recall

(3)代码实现

基于set 实现的搜索引擎

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

|

import nltk

from collections import defaultdict

from nltk.stem.snowball import EnglishStemmer

class Index:

# init 里面更多的是全局变量的定义;函数才是操作的定义

def __init__(self, tokenizer, stemmer =None, stopwords =None):

self.tokenizer =tokenizer

self.stemmer =stemmer # 词干提取,将词还原成 词本来的形式

self.index =defaultdict(list)

self.documents = {}

self.__unique_id =0

if not stopwords:

self.stopwords =set()

else:

self.stopwords =set(stopwords)

def lookup(self, word):

word =word.lower()

if self.stemmer:

word =self.stemmer.stem(word)

return [ self.documents.get(id, None) for id in self.index.get(word)]

# add 是维护两个操作, 一个是documents 的操作,一个是 index的操作

def add(self, document):

# 维护(关键词 , index列表) 的dictionary;对于每一个关键词,都需要加上当前的document 的id

for token in [t.lower() for t in nltk.word_tokenize(document)]:

if token in self.stopwords:

continue

if self.stemmer:

token =self.stemmer.stem(token)

# 这个不是很理解 逻辑,想要干什么

if self.__unique_id not in self.index[token]:

self.index[token].append(self.__unique_id)

# 下面是documents 维护,每一来一个文档,就加了进去

self.documents[self.__unique_id] =document

self.__unique_id +=1

index =Index(nltk.word_tokenize, EnglishStemmer(), nltk.corpus.stopwords.words('english'))

#TOP10 Dire straits

index.add('Industrial Disease')

index.add('Private Investigations')

index.add('So Far Away')

index.add('Twisting by the Pool')

index.add('Skateaway')

index.add('Walk of Life')

index.add('Romeo and Juliet')

index.add('Tunnel of Love')

index.add('Money for Nothing')

index.add('Sultans of Swing')

# TOP10 Led Zeppelin

index.add('Stairway To Heaven')

index.add('Kashmir')

index.add('Achilles Last Stand')

index.add('Whole Lotta Love')

index.add('Immigrant Song')

index.add('Black Dog')

index.add('When The Levee Breaks')

index.add('Since I\'ve Been Lovin\' You')

index.add('Since I\'ve Been Loving You')

index.add('Over the Hills and Far Away')

index.add('Dazed and Confused')

# Let's make some queries:

print( index.lookup('loves'))

# ['Tunnel of Love', 'Whole Lotta Love', "Since I've Been Loving You"]

print( index.lookup('loved'))

# ['Tunnel of Love', 'Whole Lotta Love', "Since I've Been Loving You"]

print (index.lookup('daze'))

# ['Dazed and Confused']

print (index.lookup('confusion'))

# ['Dazed and Confused']

|

python 中set 操作是两两相交进行的,所以执行顺序会对操作时间造成很大的影响。尽可能使得前面的结果产生的结果小,这样之后的结果只会更小。

1

2

3

4

|

timeit set.intersection(*(inverted_index[c] for c in "aje"))

1000 loops, best of 3: 366 µs per loop

timeit set.intersection(*(inverted_index[c] for c in "aej"))

10 loops, best of 3: 23.1 ms per loop

|

所以,如果是基于set 结构实现的,那么优化的原则是:每一次操作的中间结果越小越好。最开始选择最小的,可以先遍历一遍所有的query 命中的doc的数量,然后选择命中数量最少的进行set 操作。

最好匹配(best match)

Best-match or ranking models are now more common

Boolean or structured queries can be part of a best-match retrieval model

最好匹配(best match)的特点

- Query describes good or “best” matching document

- Every document matches query to some degree

- Result is ranked list of documents

(1)优点

- Significantly more effective than exact match

- Similar efficiency (based on inverted file implementations)

- Easier to use (supports full text queries)

(2)缺点

- More difficult to convey an appropriate cognitive model (“control”)

- Full text does not mean natural language understanding (no “magic”)

(3)Boolean Retrieval

Many users prefer Boolean. On Google, the default interpretation of a query $[w_1, w_2, \dots , w_n ]$ is $w_1$ AND $w_2$ AND … AND $w_n$。如果没有得到你想要的结果(在Google 上,不是百度),那么可能是以下原因:

- 搜索结果中含有 variant of $w_i$

- 很长的query 以至于无法解析

- bool expression generates very few hits

Simple Boolean vs. Ranking of result set

- Simple Boolean retrieval returns matching documents in no particular order

- Google (and most well designed Boolean engines) rank the result set - they rank good hits higher than bad hits

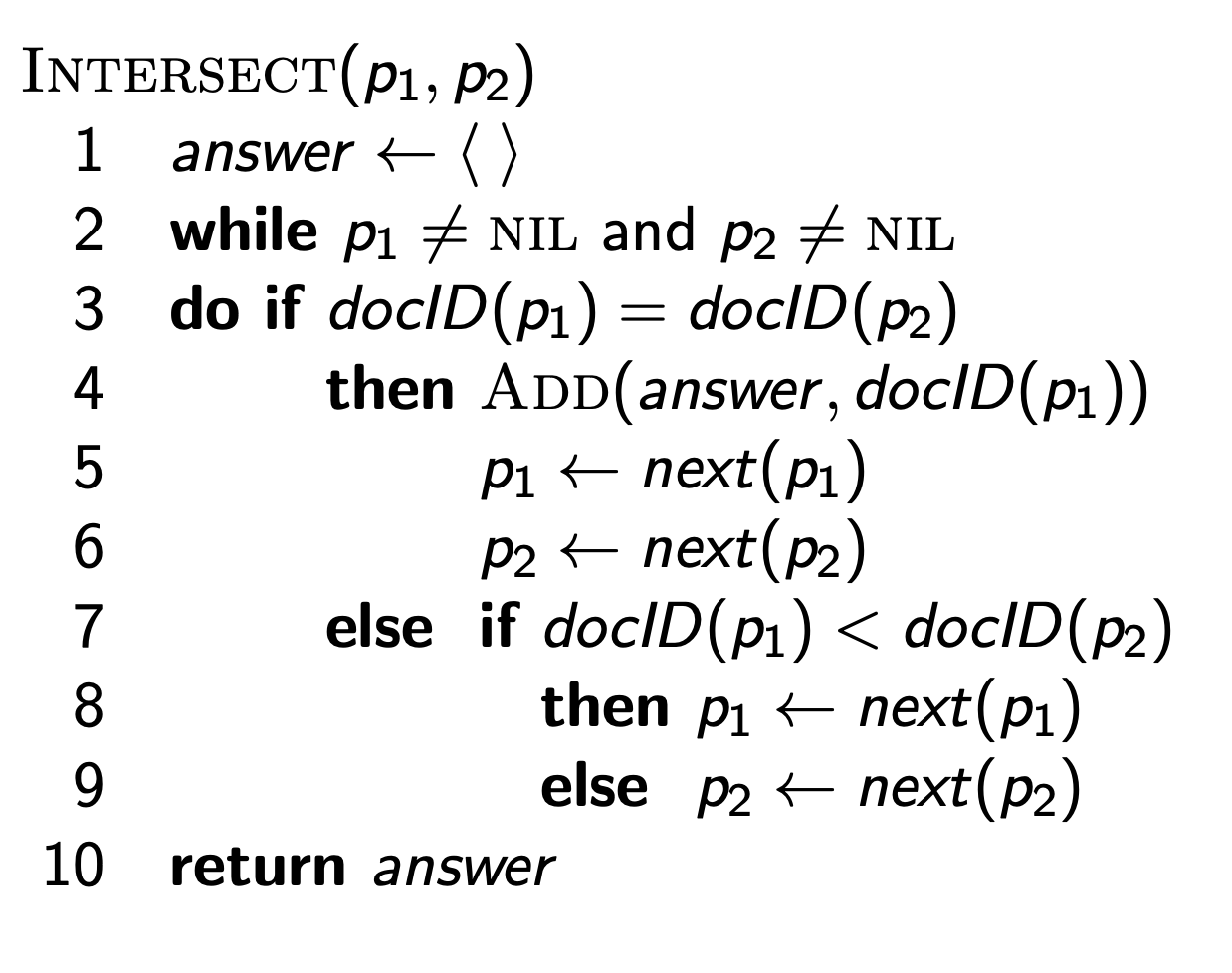

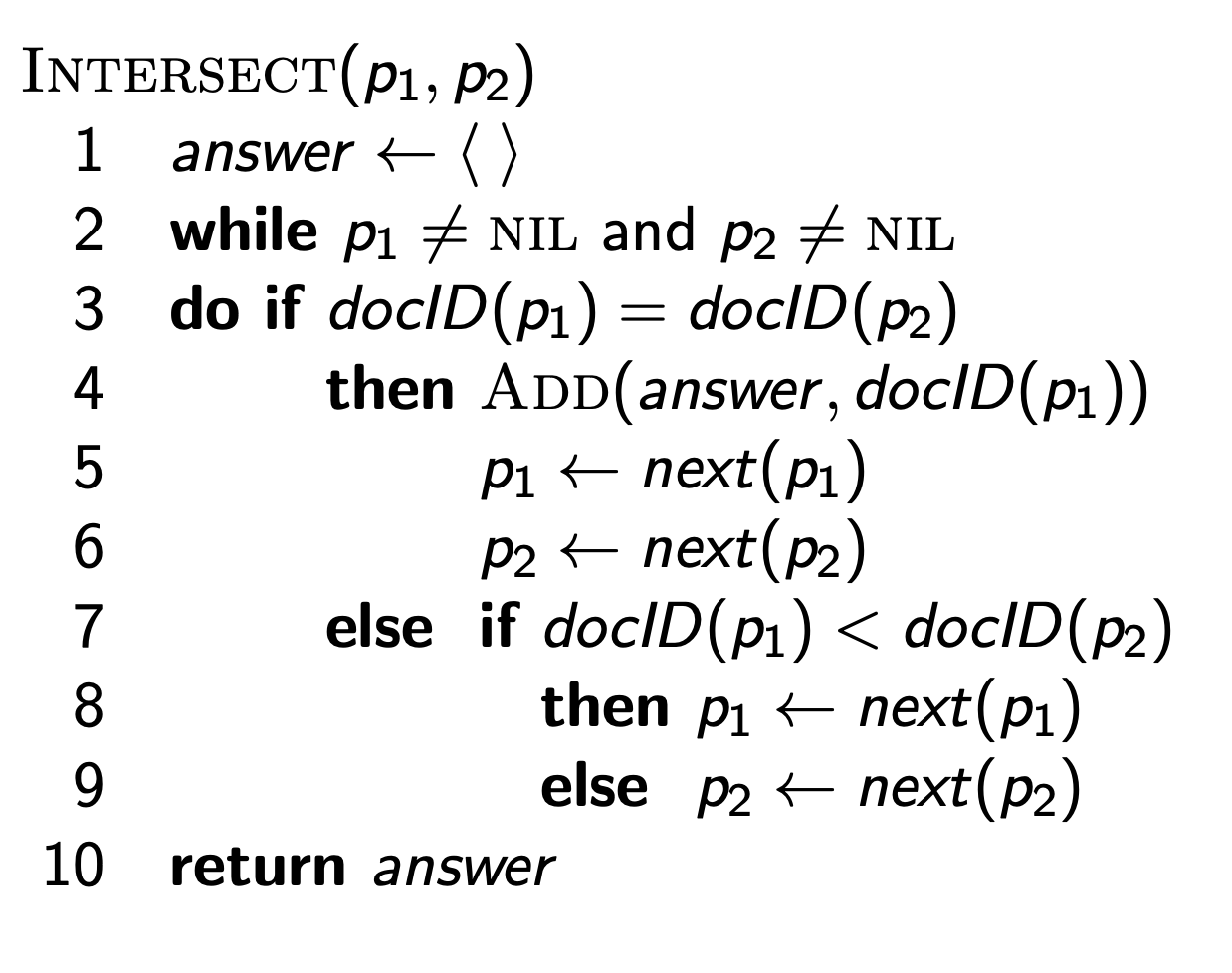

Boolean Retrieval伪代码如下:

`

`

上面的问题是两个有序表的合并算法,使用双指针,时间复杂度是$O(m +n)$。使用c语言实现。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

|

// p1,p2 是指向两个posting 链的指针

Posting intersect(p1, p2)

{

Posting answer;

while(p1 != null && p2!= null)

{

if(p1 ==p2)

{

add(answer, p1->docID);

p1 =p1->next;

p2 =p2->next;

}

else if (p1 >p2) p2 =p2 ->next;

else p1 =p1->next;

}

}

|

(4)Inverted Index

In computer science, an inverted index (also referred to as a postings file or inverted file) is a database index storing a mapping from content, such as words or numbers, to its locations in a table, or in a document or a set of documents (named in contrast to a forward index, which maps from documents to content).

倒排索引是一种数据库索引方式,存储了从 内容(关键字或者数字)到其位置的映射关系。该种索引方式和正排索引相反。该种数据结构是典型的搜索引擎检索算法重要的部分。

索引构建过程

1). 词条序列: 从文档文件到 (关键字,DocID)的转换

2). 按照词项排序:每个关键词按照DocID 排序

3). 词典和倒排记录表:

- 某关键词在单片文档中出现就会被合并

- 拆分为词典和倒排记录表两部分

- 每个关键词出现的文档数目(docFrequency, DocIDs) 被加入

`

`

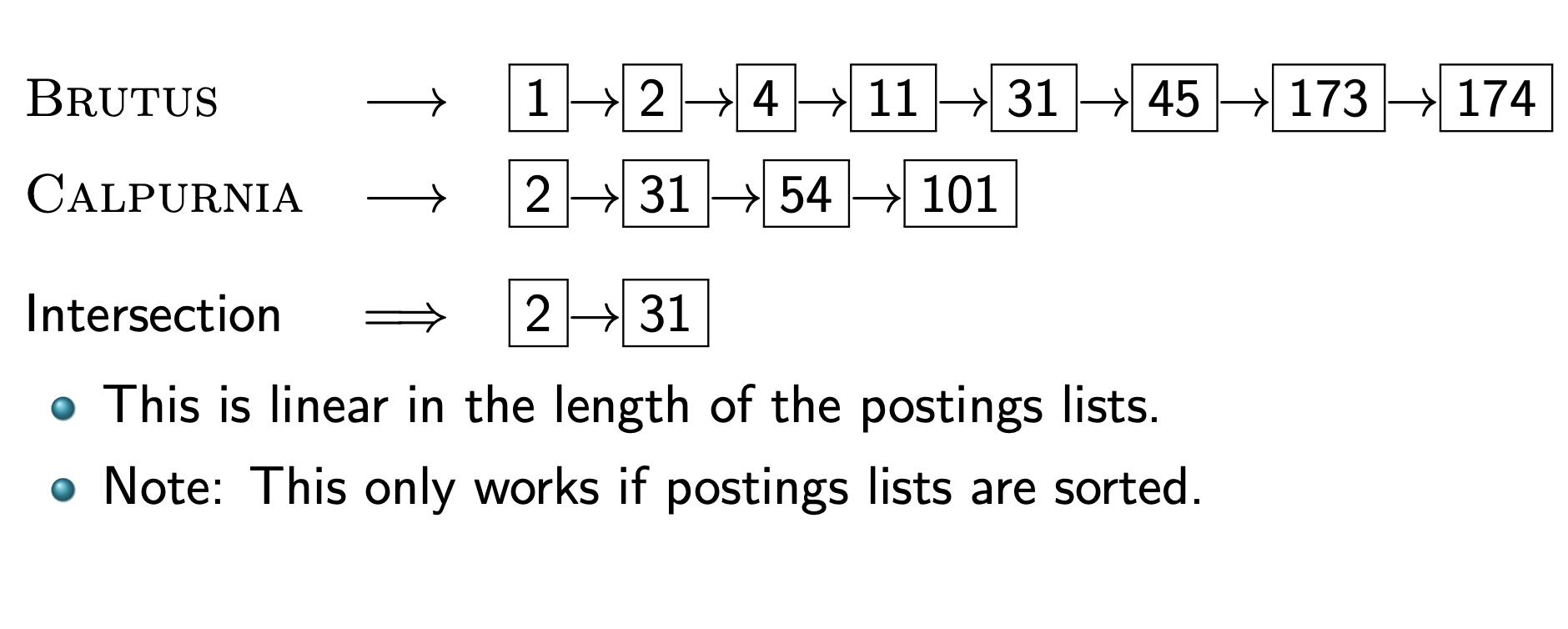

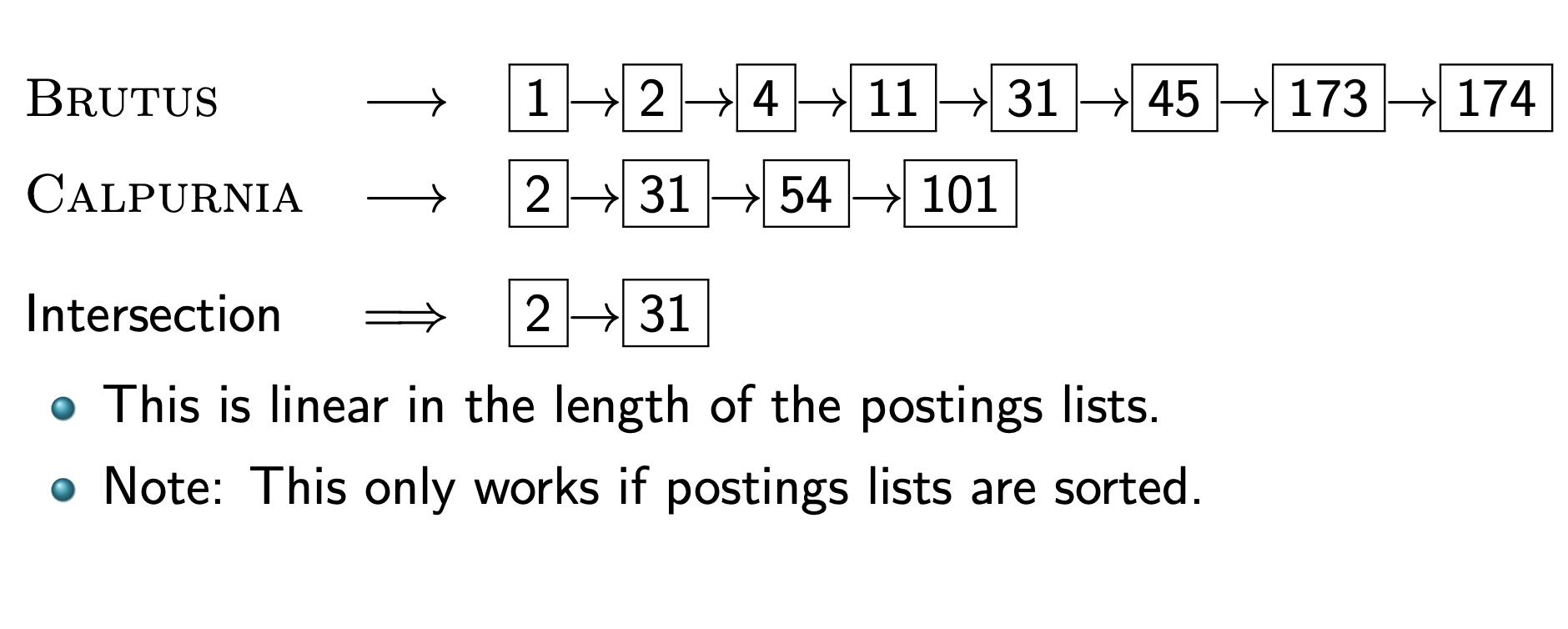

所以说如果以关键词 BRUTUS 和CALPURNIA 得到记录表,那么如何得到最后的包含两者的文档列表呢?这里就用到了上面所说的Bool Retrieval。

`

`

第三种方式将精确匹配和最好匹配结合起来。

项目中出现的一些好的代码,总结整理。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

def assert_dir(path):

if not os.path.exists(path):

print("ERROR : {} does not exists".format(path))

sys.exit(1)

if not os.path.isdir(path):

print("Error: {} is not a directory".format(path))

sys.exit(1)

def preprocess_text(text):

processed_text =text.lower()

non_words =re.compile(r"[^A-Za-z]+")

processed_text =re.sub(non_words, ' ', processed_text)

for doc_file in os.listdir(doc_path):

filename =os.path.join(doc_path, doc_file)

text = get_text_from_file(filename)

return processed_text

def writeDown(docs_path, data_path):

with open(doc_path, mode ='w') as f:

for word in inverted_index.keys():

f.write(word +'\n')

# python 中序列化数据存储(二进制)

# pickle模块只能在python中使用,python中几乎所有的数据类型(列表,字典,集合,类等)都可以用pickle来序列化,

with open(inverted_index_file, mode ='wb') as f:

pickle.dump(inverted_index, f)

result =None

for word in words:

if result is None:

result =inverted_index.get(word)

else:

result.intersection_update(inverted_index.get(word))

x ={'a', 'b', 'c'}

y ={'c', 'd', 'e'}

z= {'f', 'g', 'c'}

x.intersection_update(y, z) # output: c

|

有时候需要进行多个文件的合并或者拆分,此时需要打开多个文件。python 中有种简洁的写法

1

2

3

4

5

|

with open(file1) as f1, open(file2) as f2, open(file3) as f3:

for i in f1:

j =f2.readline()

k =f3.readline()

print(i, j, k)

|

或者还可以写成

1

2

3

4

5

6

|

from contextlib import nested

with nested(open(file1), open(file2), open(file3)) as (f1, f2,f3):

for i in f1:

j =f2.readline()

k =f3.readline()

pirnt(i, j, k)

|

参考文献

Inverted index

Introduction to Information Retrieval

`

` `

` `

`